I recently came across this very interesting rounding issue in MS SQL server.

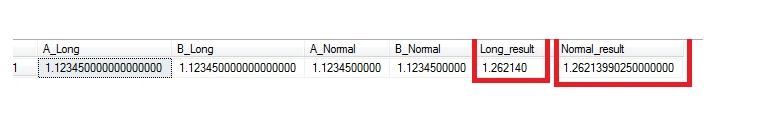

Originally I thought in order to keep computation in highest accuracy possible, I should use the max allowed precision, i.e. on SQL server a decimal(38,18). However after definition of a computed field which is just a multiply of two decimal(38,18) fields, surprisingly the result is rounded, the accuracy is even worse than the multiply of two decimal(20,10) fields.

Below is the example:

CREATE TABLE [dbo].[demo_table](

[A_Long] [decimal](38, 18) NULL,

[B_Long] [decimal](38, 18) NULL,

[A_Normal] [decimal](20, 10) NULL,

[B_Normal] [decimal](20, 10) NULL,

[Long_result] AS ([A_long]*[B_Long]),

[Normal_result] AS ([A_normal]*[b_normal])

) ON [PRIMARY]

— insert demo figures

insert into demo_table(A_Long,B_Long,A_Normal,B_Normal) values(1.12345,1.12345,1.12345,1.12345);

–check result, you may discover that the result is rounded to 1.26140!

select * from demo_table

So what happened? The best post I can find so far is this:

http://dba.stackexchange.com/questions/41743/automatic-decimal-rounding-issue

The solution:

The simplest solution I use is reduce the precision, like demonstrated in above demo_table, change column precision to (20,10) instead of (38,18)!